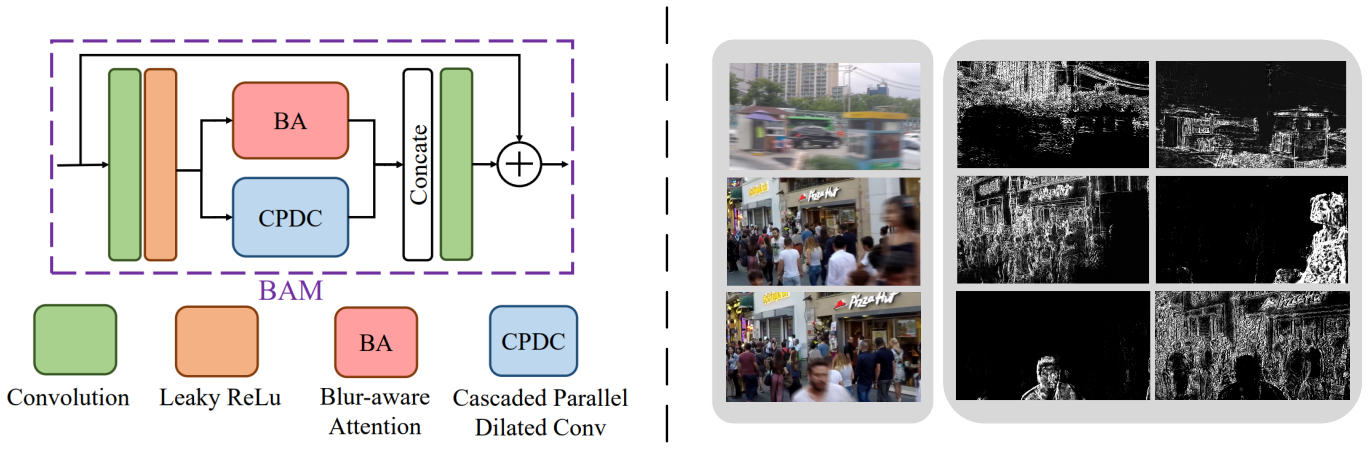

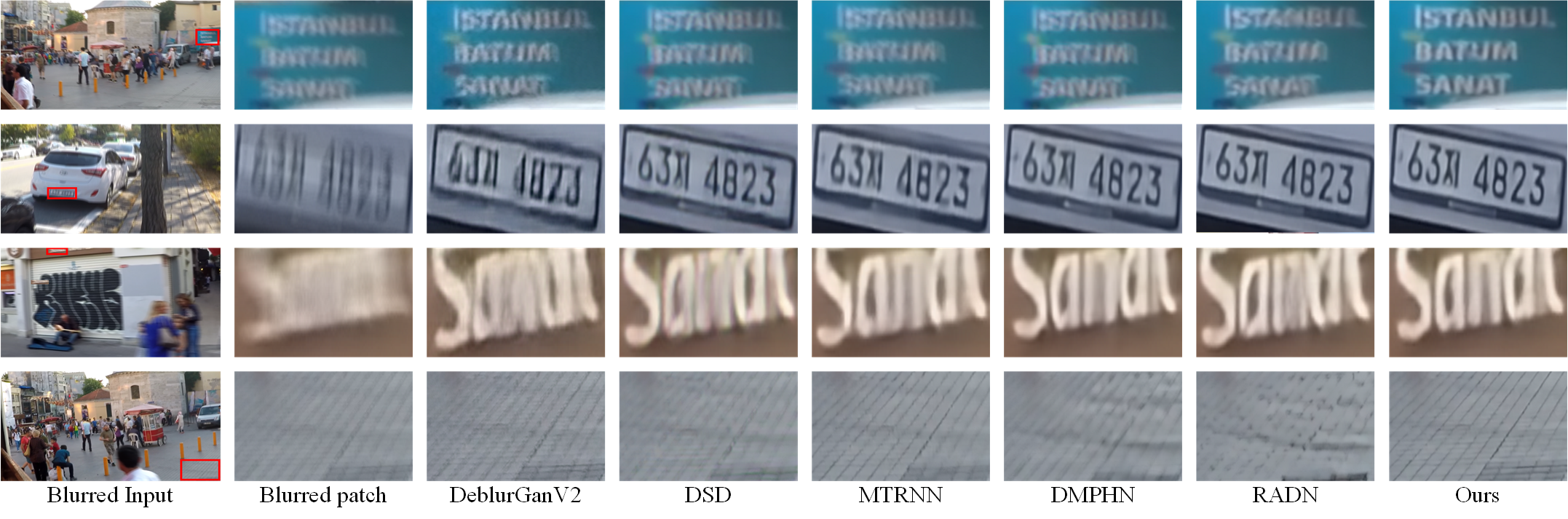

Image motion blur usually results from moving objects or camera shake. Such blur is generally directional and non-uniform. Previous work has attempted to handle non-uniform blur with self-recurrent multi-scale or multi-patch architectures accompanied by self-attention. However, self-recurrent frameworks typically lead to longer inference times, while inter-pixel or inter-channel self-attention may cause excessive memory usage. This paper proposes blur-aware attention networks (BANet), which achieve accurate and efficient deblurring in a single forward pass. BANet uses region-based self-attention with multi-kernel strip pooling to disentangle blur patterns of different magnitudes and orientations, together with cascaded parallel dilated convolutions to aggregate multi-scale content features. Extensive experiments on the GoPro and HIDE benchmarks demonstrate that BANet performs favorably against state-of-the-art methods in blurred image restoration and can produce deblurred results in real time.
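To make the idea of multi-kernel strip pooling more concrete, here is a minimal, parameter-free sketch in pure Python. It is not BANet's implementation. It assumes a single-channel feature map stored as nested lists, pools over local strips of odd length k (the strip lengths 3 and 7 and the helper names `strip_pool` and `multi_kernel_strip_attention` are hypothetical), and stands in for the learned region-based self-attention with a plain average of the pooled maps followed by a sigmoid gate.

```python
import math
from typing import List, Sequence

Grid = List[List[float]]  # one channel, H rows x W columns


def strip_pool(x: Grid, k: int, horizontal: bool) -> Grid:
    """Average each pixel over a 1-D strip of odd length k centred on it.

    horizontal=True averages along the row (a 1 x k strip); False averages
    along the column (a k x 1 strip). Strips are clipped at the borders, so
    the output has the same H x W size as the input.
    """
    if k < 1 or k % 2 == 0:
        raise ValueError("strip length k must be a positive odd integer")
    h, w = len(x), len(x[0])
    r = k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            if horizontal:
                vals = [x[i][c] for c in range(max(0, j - r), min(w, j + r + 1))]
            else:
                vals = [x[rr][j] for rr in range(max(0, i - r), min(h, i + r + 1))]
            out[i][j] = sum(vals) / len(vals)
    return out


def multi_kernel_strip_attention(x: Grid, kernels: Sequence[int] = (3, 7)) -> Grid:
    """Hypothetical parameter-free gate built from multi-kernel strip pooling.

    For every strip length in `kernels`, pool horizontally and vertically,
    average all 2 * len(kernels) pooled maps, squash the result with a
    sigmoid, and use it to reweight the input pixel by pixel.
    """
    h, w = len(x), len(x[0])
    fused = [[0.0] * w for _ in range(h)]
    branches = 0
    for k in kernels:
        for horizontal in (True, False):
            pooled = strip_pool(x, k, horizontal)
            for i in range(h):
                for j in range(w):
                    fused[i][j] += pooled[i][j]
            branches += 1
    # x * sigmoid(mean pooled response): the gate lies in (0, 1).
    return [
        [x[i][j] / (1.0 + math.exp(-fused[i][j] / branches)) for j in range(w)]
        for i in range(h)
    ]


if __name__ == "__main__":
    streak = [[0.0] * 5 for _ in range(5)]
    streak[2] = [1.0] * 5  # a horizontal streak on a dark background
    for row in multi_kernel_strip_attention(streak):
        print([round(v, 3) for v in row])
```

On the horizontal streak, the horizontal strips stay entirely inside the streak while the vertical strips dilute it, and longer strips dilute it further. Because this sketch averages all branches with equal weight, a streak and its transpose receive the same gate; in a trained network, learned weights on each pooling branch are what would let the module respond differently to blur of different orientations and extents.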

Fu-Jen Tsai*, Yan-Tsung Peng*, Yen-Yu Lin, Chung-Chi Tsai, and Chia-Wen Lin, "BANet: Blur-aware Attention Networks for Dynamic Scene Deblurring", arXiv preprint arXiv:2101.07518, 2021.

    @inproceedings{BANet,
      author = {Tsai, Fu-Jen* and Peng, Yan-Tsung* and Lin, Yen-Yu and Tsai, Chung-Chi and Lin, Chia-Wen},
      title = {BANet: Blur-aware Attention Networks for Dynamic Scene Deblurring},
      booktitle = {arXiv preprint arXiv:2101.07518},
      year = {2021}
    }

- Nah et al. Deep Multi-scale Convolutional Neural Network for Dynamic Scene Deblurring. In CVPR, 2017.

- Tao et al. Scale-recurrent Network for Deep Image Deblurring. In CVPR, 2018.

- Gao et al. Dynamic Scene Deblurring with Parameter Selective Sharing and Nested Skip Connections. In CVPR, 2019.

- Kupyn et al. DeblurGAN-v2: Deblurring (Orders-of-Magnitude) Faster and Better. In ICCV, 2019.

- Zhang et al. Deep Stacked Hierarchical Multi-patch Network for Image Deblurring. In CVPR, 2019.

- Hou et al. Strip Pooling: Rethinking Spatial Pooling for Scene Parsing. In CVPR, 2020.

- Park et al. Multi-Temporal Recurrent Neural Networks For Progressive Non-Uniform Single Image Deblurring With Incremental Temporal Training. In ECCV, 2020.

- Purohit et al. Region-Adaptive Dense Network for Efficient Motion Deblurring. In AAAI, 2020.

- Suin et al. Spatially-Attentive Patch-Hierarchical Network for Adaptive Motion Deblurring. In CVPR, 2020.